Comprehensive Assessment of Neural Network Synthetic Training Methods using Domain Randomization for Orbital and Space-based Applications

Abstract

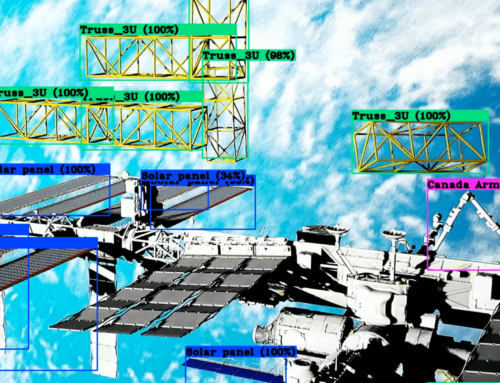

The continued advancement of neural networks and other deep learning architectures has fundamentally changed the definition of “state of the art” (SOTA) in a wide and ever-growing range of disciplines. Arguably the most impacted field is computer vision, where deep networks provide flexible, general function-approximation frameworks capable of accurately and reliably performing object identification and classification on a wide range of image datasets. However, these gains come at a cost. The very large number of images required to train a deep network makes it impractical for applications where large image datasets are limited or simply do not exist. For many problem sets, collecting the required image data is too expensive, too dangerous, or too cumbersome. Space-based applications are a prime example of an imagery-limited domain because of the complex and extreme environment in which they operate. At the same time, breakthroughs in space-based computer vision would enable a wide range of fundamental capabilities required for the eventual automation of this critical domain, including robotics-based construction and assembly, repair, and surveying of orbital platforms or celestial bodies. To bridge this capability gap, researchers have started to rely on synthetic image datasets rendered with advanced 3D rasterization software.

Generating synthetic data is only the first step. Space-based computer vision is more complex than traditional terrestrial tasks because of the extreme variances encountered, which can confuse or degrade optical sensors as well as computer vision (CV) and machine learning (ML) algorithms. These include tumbling in orientation and translation along all axes, the absence of a horizon on which a model can orient itself, extreme light saturation on the lit sides of celestial bodies, and occlusion on dark sides and in shadows. To produce more reliable and robust results from computer vision architectures, a newer method of synthetic image generation called domain randomization has started to be applied to more traditional computer vision problem sets. This method involves creating an environment of randomized patterns, colors, and lighting while keeping the structure of the objects of interest rigid. It may prove a promising solution to the space-based variance problem. This paper explores computer vision, domain randomization, and the computational hardware required to apply them to space-based applications.
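
As a rough illustration of the domain randomization idea described above, the following minimal Python sketch samples one randomized scene configuration per training image. It is not the rendering pipeline used in this work: the `SceneConfig` fields, the `PATTERNS` names, the mesh name, and the light-intensity range are all hypothetical choices made for illustration. The only property it is meant to show is that pattern, color, lighting, and object attitude vary from sample to sample while the geometry of the object of interest stays fixed.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical background pattern names, chosen for illustration only.
PATTERNS = ("noise", "stripes", "checker", "gradient")


@dataclass(frozen=True)
class SceneConfig:
    mesh_name: str          # object of interest; its geometry is never randomized
    orientation: tuple      # unit quaternion describing a random tumble attitude
    background_rgb: tuple   # random background color, each channel in [0, 1]
    texture_pattern: str    # random background pattern
    light_direction: tuple  # unit vector; no horizon or "up" is assumed
    light_intensity: float  # spans deep shadow to saturation (assumed range)


def random_unit_quaternion(rng):
    """Sample a uniformly distributed rotation (Shoemake's method)."""
    u1, u2, u3 = rng.random(), rng.random(), rng.random()
    a, b = math.sqrt(1.0 - u1), math.sqrt(u1)
    return (
        a * math.sin(2.0 * math.pi * u2),
        a * math.cos(2.0 * math.pi * u2),
        b * math.sin(2.0 * math.pi * u3),
        b * math.cos(2.0 * math.pi * u3),
    )


def random_unit_vector(rng):
    """Sample a direction uniformly on the unit sphere."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(phi), r * math.sin(phi), z)


def sample_scene(mesh_name, rng):
    """Randomize appearance and pose while keeping the object's mesh fixed."""
    # Log-uniform intensity so dim and saturated scenes are equally likely.
    intensity = math.exp(rng.uniform(math.log(0.01), math.log(100.0)))
    return SceneConfig(
        mesh_name=mesh_name,
        orientation=random_unit_quaternion(rng),
        background_rgb=(rng.random(), rng.random(), rng.random()),
        texture_pattern=rng.choice(PATTERNS),
        light_direction=random_unit_vector(rng),
        light_intensity=intensity,
    )


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so a dataset can be regenerated
    for scene in (sample_scene("satellite_bus", rng) for _ in range(3)):
        print(scene)
```

Each sampled configuration would then be handed to whatever renderer produces the synthetic image and its label. Sampling attitude and light direction uniformly over the sphere is one simple way to reflect the tumble and missing-horizon conditions listed above; other distributions may suit a particular mission better.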

Findings

Given the promising applications of CNN-based computer vision architectures, synthetic data generation, and domain randomization, applying these technologies to space-based problem sets may prove to be the mission-essential, end-to-end solution needed to achieve truly autonomous on-orbit robotics capability.