Optical Satellite/Component Tracking and Classification via Synthetic CNN Image Processing for Hardware-in-the-Loop testing and validation of Space Applications using free flying drone platforms

Abstract

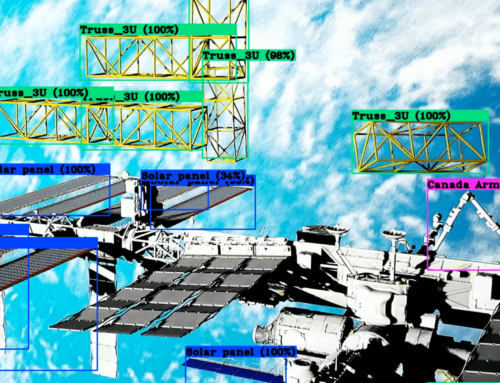

The proliferation of reusable space vehicles has fundamentally changed how we inject assets into orbit and beyond, increasing the reliability and frequency of launches. This has led to the rapid development and adoption of new technologies in the aerospace sector, such as computer vision (CV), machine learning (ML), and distributed networking. All of these technologies are necessary to enable genuinely autonomous decision-making for space-borne platforms: as our spacecraft travel further into the solar system and our mission sets become more ambitious, they require true “human out of the loop” solutions for a wide range of engineering and operational problem sets. The deployment of systems proficient at classifying, tracking, capturing, and ultimately manipulating orbital assets and components for maintenance and assembly, both in the persistently dynamic environment of space and on the surface of other celestial bodies, has unique automation potential; these tasks are commonly referred to as On-Orbit Servicing and In-Space Assembly. Given the inherent dangers of the manned spaceflight and extravehicular activity (EVA) methods currently employed for spacecraft construction and maintenance, coupled with the current limitations on long-duration human flight outside of low Earth orbit, space robotics armed with generalized sensing and control machine learning architectures is a tremendous enabling technology. However, the large amounts of sensor data required to adequately train neural networks for these space domain tasks are either limited or non-existent, requiring alternate means of data collection or generation. Additionally, the tools and methodologies required for wide-scale hardware-in-the-loop simulation, testing, and validation of these new technologies outside of multimillion-dollar facilities are largely still in their developmental stages.
This dissertation proposes a novel approach for simulating space-based computer vision sensing and robotic control using both physical and virtual reality testing environments. This methodology is designed to be both affordable and expandable, enabling hardware-in-the-loop simulation and validation of space systems at large scale across multiple institutions. While the specific computer vision models in this dissertation focus narrowly on imagery problems found on orbit, this work can be expanded to any problem set that requires robust onboard computer vision, robotic manipulation, and free-flight capabilities.

Findings

This research intersected many disciplines, requiring a working knowledge of several fields including software engineering, robotics, computer vision, machine learning, fabrication, animation, theater projection, and visual design. Together, these culminated in a successful deployment of onboard automated tasking via computer vision and sensor localization, for the purposes of automated robotic tasking and object capture and manipulation in in-space assembly or on-orbit servicing implementations. This dissertation has demonstrated the applicability of Convolutional Neural Networks, synthetic data generation, and domain randomization to detecting various orbital objects of interest for in-space assembly (ISA) and on-orbit servicing (OOS) operations in simulated environments, achieving a mean average precision within 11% of non-synthetically trained models for a rather difficult array of geometrically similar objects. That simulated environment was then pulled out of a relatively small computer screen and projected into a large 21,000-cubic-meter space for large-scale visual simulation, enabling researchers to interact visually with optical sensors and physically with real-world mock-ups, using real-world hardware inside a visual simulation environment. This solution has opened the door for hardware-in-the-loop computer vision testing deployed on real-world free-flying hardware, in environments that are otherwise difficult to capture or recreate, or in which hardware is difficult to operate safely under a controlled testing regime. We compared the capabilities of the current SpaceDrones Architectures with recent literature, detailing the combination of many areas of study to solve a critical need within the space industry. However, the problem of hardware-in-the-loop testing for any number of parameters or environmental factors is not specific to the aerospace community.
This simulation solution can and should be applied to any number of research, search and rescue, defense, and general automation applications.