SpaceDrones 2.0—Hardware-in-the-Loop Simulation and Validation for Orbital and Deep Space Computer Vision and Machine Learning Tasking Using Free-Flying Drone Platforms

Abstract

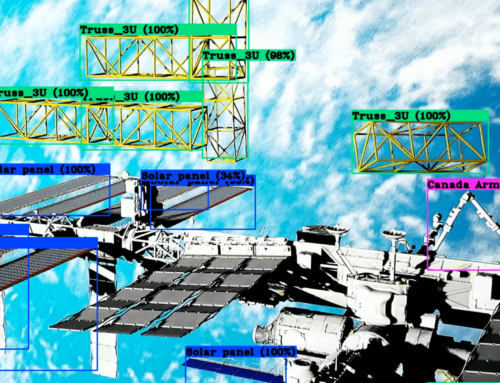

The proliferation of reusable space vehicles has fundamentally changed how assets are injected into low Earth orbit and beyond, increasing both the reliability and frequency of launches. This has in turn led to the rapid development and adoption of new technologies in the aerospace sector, including computer vision (CV), machine learning (ML)/artificial intelligence (AI), and distributed networking. All these technologies are necessary to enable truly autonomous “Human-out-of-the-loop” mission tasking for spaceborne applications as spacecraft travel further into the solar system and missions become more ambitious. This paper proposes a novel approach for simulating and validating space-based computer vision sensing and machine learning, using synthetic data generation to supply the large amounts of space-based imagery needed to train computer vision models. We also introduce domain randomization, a method of image data augmentation, to improve machine learning performance in the dynamic domain of spaceborne computer vision and to address space-specific challenges such as orientation and lighting variations. The synthetically trained computer vision models are then used for hardware-in-the-loop testing and evaluation on free-flying robotic platforms, enabling sensor-based orbital vehicle control, onboard decision making, and mobile manipulation in a manner similar to air-bearing table methods. Given the current energy constraints of space vehicles using solar-based power plants, cameras provide an energy-efficient means of situational awareness compared to active sensing instruments. When coupled with computationally efficient machine learning algorithms and methods, they can enable space systems proficient in classifying, tracking, capturing, and ultimately manipulating objects for orbital/planetary assembly and maintenance (tasks commonly referred to as In-Space Assembly and On-Orbit Servicing).
Given the inherent dangers of the manned spaceflight and extravehicular activities (EVAs) currently employed to perform spacecraft maintenance, and the current limitations of long-duration human spaceflight beyond low Earth orbit, space robotics equipped with generalized sensing, control, and machine learning architectures have unique automation potential. However, the tools and methodologies required for hardware-in-the-loop simulation, testing, and validation at a large scale and at an affordable price point are still in development. By leveraging a drone’s free-flight maneuvering capability, theater projection technology, synthetically generated orbital and celestial environments, and machine learning, this work strives to build a robust hardware-in-the-loop testing suite. Although the computer vision models in this paper focus on visual sensing problems in orbit, this work can be extended to any problem set that requires robust onboard computer vision, robotic manipulation, and free-flight capabilities.

Findings

This capability requires a working knowledge of several different disciplines, including software engineering, robotics, computer vision, machine learning, fabrication, animation, theater projection, and visual design, all culminating in the successful deployment of automated robotic tasking and object capture/manipulation via onboard computer vision and sensor localization. This dissertation has demonstrated the applicability of convolutional neural networks, synthetic data generation, and domain randomization to detecting various orbital objects of interest for In-Space Assembly (ISA) and On-Orbit Servicing (OOS) operations in simulated environments, achieving a mean average precision within 11% of non-synthetically trained models for a rather difficult array of geometrically similar objects. The simulated environment was then taken off a relatively small computer screen and projected into a large 21,000-cubic-meter space for large-scale visual simulation, enabling researchers to interact with real-world hardware within a virtual environment. This solution has opened the door to hardware-in-the-loop computer vision testing onboard real-world free-flying hardware in environments that are difficult to capture or recreate, and to safely operating hardware in a controlled testing regime. This system will only continue to improve as more capabilities (detailed below in the future work section) are brought online. Moreover, the problem of hardware-in-the-loop testing for any number of parameters or environmental factors is not specific to the aerospace community. This simulation solution can be applied to any number of research areas, including search and rescue, defense, and general automation applications.
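
To make the idea of domain randomization more concrete, the following is a minimal illustrative sketch, not the pipeline used in this work. It assumes that each synthetic training image is rendered from a randomly sampled scene configuration covering the orientation and lighting variations mentioned above. All names (`SceneParams`, `sample_scene`) and all parameter ranges are hypothetical and chosen only for illustration; the renderer itself is omitted.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    """One randomized rendering configuration (all fields are illustrative)."""
    orientation: tuple        # unit quaternion for the target object's attitude
    sun_direction: tuple      # unit vector pointing toward the light source
    sun_intensity: float      # relative brightness multiplier (assumed range)
    camera_distance_m: float  # camera-to-target range in meters (assumed range)


def random_unit_quaternion(rng):
    """Sample a rotation uniformly at random (Shoemake's method)."""
    u1, u2, u3 = rng.random(), rng.random(), rng.random()
    a, b = math.sqrt(1.0 - u1), math.sqrt(u1)
    return (
        a * math.sin(2 * math.pi * u2),
        a * math.cos(2 * math.pi * u2),
        b * math.sin(2 * math.pi * u3),
        b * math.cos(2 * math.pi * u3),
    )


def random_unit_vector(rng):
    """Sample a direction uniformly on the unit sphere."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2 * math.pi)
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(phi), r * math.sin(phi), z)


def sample_scene(rng, intensity_range=(0.2, 3.0), distance_range=(1.0, 20.0)):
    """Draw one randomized scene; the ranges are placeholders, not measured values."""
    return SceneParams(
        orientation=random_unit_quaternion(rng),
        sun_direction=random_unit_vector(rng),
        sun_intensity=rng.uniform(*intensity_range),
        camera_distance_m=rng.uniform(*distance_range),
    )


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so a dataset can be regenerated
    for _ in range(3):
        print(sample_scene(rng))
```

In a sketch like this, each sampled configuration would be passed to a renderer to produce one labeled image, so that the detector sees a target under many attitudes and illumination conditions rather than a few hand-picked ones.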