A Full Distributed Multipurpose Autonomous Flight System Using 3D Position Tracking and ROS

Abstract

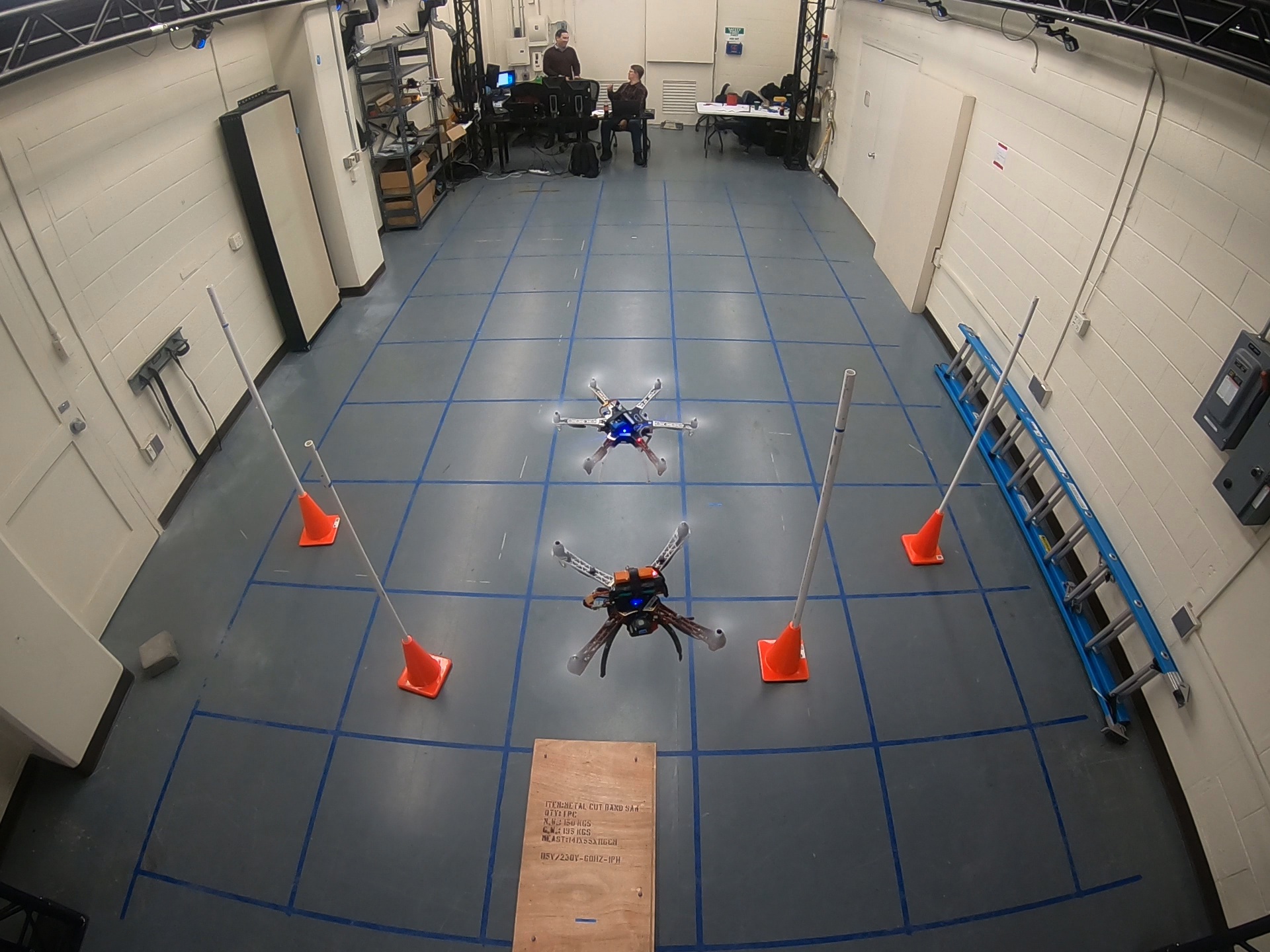

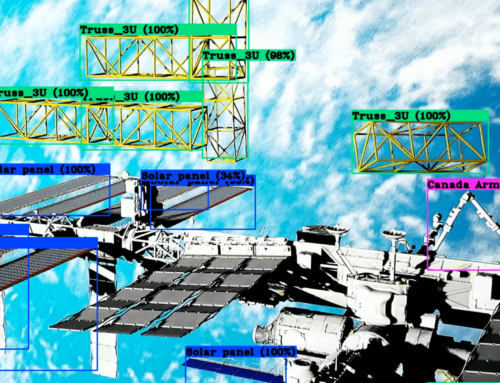

This document describes an approach to developing a fully distributed, multipurpose autonomous flight system. With a set of hardware, software, and standard flight procedures for multiple unmanned aerial vehicles (UAVs), it is possible to achieve a relatively low-cost, plug-and-play, fully distributed architecture for multipurpose applications. The resulting system comprises an OptiTrack motion capture system, a Pixhawk flight controller, a Raspberry Pi companion computer, and the Robot Operating System (ROS) for inter-node communication. The architecture uses a secondary PID controller together with MAVROS, an open-source ROS package, for onboard processing and interfacing with the flight controller. It features a procedure that receives the position vector from the OptiTrack system and returns the desired velocity vector at each time step, which eases integration for researchers. The result is a reliable, easy-to-use autonomous system for multipurpose engineering research. To demonstrate its versatility, this paper presents a robot navigation experiment based on the fundamentals of Markov Decision Processes (MDPs), running wirelessly at 60 Hz with a network latency below 2 ms. This paper argues that fully distributed systems should be embraced because they maintain the reliability of the system at lower cost and with easier implementation for the ground station. Combined with a deliberate approach to software architecture design, this encourages and facilitates the use of autonomous systems for transdisciplinary research.

Findings

While implementing a system as described here requires some up-front cost and effort, the benefits to researchers are clear. Setting up all the hardware and implementing the software takes around two weeks. Encapsulating the PID control inside the agent's decision-making process, together with an API manual, enables researchers to work on their scripts independently before coming to the laboratory. Moreover, all that is required to run a new experiment is to copy the relevant files onto the UAV's companion computer and execute the Python script.
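The position-to-velocity procedure mentioned in the abstract can be illustrated with a minimal sketch. It assumes a per-axis PID acting on the position error and a symmetric speed limit; the class name `VelocityPID`, the gains, and the `v_max` limit are hypothetical and are not taken from the system described here.

```python
class VelocityPID:
    """Per-axis PID that maps a 3D position error to a velocity command.

    All gains and the speed limit are illustrative assumptions.
    """

    def __init__(self, kp=1.0, ki=0.0, kd=0.2, v_max=0.5):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.v_max = v_max              # assumed speed limit in m/s
        self.integral = [0.0, 0.0, 0.0]
        self.prev_error = None          # no derivative term on the first step

    def step(self, position, target, dt):
        """Return the desired velocity (vx, vy, vz) for one time step."""
        error = [t - p for p, t in zip(position, target)]
        if self.prev_error is None:
            derivative = [0.0, 0.0, 0.0]
        else:
            derivative = [(e - pe) / dt for e, pe in zip(error, self.prev_error)]
        self.integral = [i + e * dt for i, e in zip(self.integral, error)]
        self.prev_error = error
        raw = [
            self.kp * e + self.ki * i + self.kd * d
            for e, i, d in zip(error, self.integral, derivative)
        ]
        # Clamp each axis so the command stays within the assumed limit.
        return tuple(max(-self.v_max, min(self.v_max, v)) for v in raw)


pid = VelocityPID()
# One 60 Hz step: UAV at (0, 0, 1) m, target 1 m further along x.
command = pid.step(position=(0.0, 0.0, 1.0), target=(1.0, 0.0, 1.0), dt=1.0 / 60.0)
```

In a setup like the one described, `position` would come from the motion capture stream and the returned tuple would be forwarded to the flight controller as a velocity setpoint; both steps are outside this sketch.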

Using a wireless connection from their personal computer, researchers can access the UAV's companion computer directly to modify their code between runs. Currently, all data are saved in a CSV file containing every object's position, orientation, and velocity, along with the decision-making process data. These data are saved at the system frequency, currently 60 Hz, individually by the UAV and the ground station, both referencing a synchronized ROS clock. Because modification is easy and data are produced readily, each researcher spends minimal time in the laboratory; the potential for higher use rates and multidisciplinary research is a significant advantage of this approach.
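As a rough illustration of the logging described above, the following sketch writes one CSV row per time step using only the Python standard library. The column names, the quaternion orientation layout, and the `action` field are assumptions made for the example; the actual file layout is not specified in this document. In a real node the timestamp would come from the synchronized ROS clock rather than being computed locally.

```python
import csv
import io

# Hypothetical column layout: timestamp, object name, position,
# orientation as a quaternion, velocity, and the chosen action.
FIELDS = ["stamp", "name", "x", "y", "z",
          "qx", "qy", "qz", "qw", "vx", "vy", "vz", "action"]


def make_row(stamp, name, position, orientation, velocity, action):
    """Flatten one sample into a dict keyed by the assumed column names."""
    values = [stamp, name, *position, *orientation, *velocity, action]
    return dict(zip(FIELDS, values))


def write_log(stream, rows):
    """Write a header followed by one CSV line per sample."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


period = 1.0 / 60.0  # one sample per 60 Hz time step
rows = [
    make_row(k * period, "uav1", (0.0, 0.0, 1.0),
             (0.0, 0.0, 0.0, 1.0), (0.1, 0.0, 0.0), "E")
    for k in range(3)
]
buffer = io.StringIO()  # stands in for a file opened with newline=""
write_log(buffer, rows)
```

Because the UAV and the ground station each write their own file, a shared timestamp column like `stamp` is what would allow the two logs to be aligned afterwards.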

The limits of this architecture have yet to be explored. Results demonstrate that an update frequency of 60 Hz, synchronized across all independent entities in the system, is reasonable for small numbers of agents. This was achieved while maintaining network latency below 5 ms with multiple agents flying at the same time. The system can run at higher frequencies, up to 240 Hz; however, latency needs further exploration when operating larger numbers of agents.
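A short calculation shows why latency matters more at higher update rates. The sketch below compares a 5 ms latency with the time available in one update period. It assumes, purely for illustration, that the 5 ms figure would also apply at 240 Hz, which has not been measured here; the function names are hypothetical.

```python
def frame_period_ms(rate_hz):
    """Duration of one update period in milliseconds."""
    return 1000.0 / rate_hz


def latency_fraction(latency_ms, rate_hz):
    """Share of one update period consumed by network latency."""
    return latency_ms / frame_period_ms(rate_hz)


# 60 Hz: period of about 16.67 ms, so 5 ms is 30% of one period.
share_60 = latency_fraction(5.0, 60)
# 240 Hz: period of about 4.17 ms, so 5 ms exceeds one full period.
share_240 = latency_fraction(5.0, 240)
```

Under this assumption, a position sample at 240 Hz could arrive more than one update late, which is one reason latency with larger numbers of agents deserves further study.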

The combination of OptiTrack motion capture camera arrays, ROS communication protocols, and distributed Raspberry Pi / Pixhawk controllers has proved to be a powerful and flexible tool for experimentation. This project successfully applied this tool to 2D navigation in a 3D space, providing experimental validation of onboard path planning algorithms. The success and relative ease of operation of this approach should prove encouraging to researchers who wish to see their work move from simulation into the physical domain.
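For readers unfamiliar with MDP-based navigation, the following sketch runs value iteration on a small 2D grid. It is a generic textbook formulation, not the planner used in the experiments: the grid size, deterministic moves, step cost of -1, discount factor, and function names are all assumptions made for the example.

```python
# Four grid moves; "N" increases y and "E" increases x (assumed convention).
ACTIONS = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


def value_iteration(width, height, goal, obstacles=frozenset(),
                    gamma=0.9, tol=1e-6):
    """Return (values, policy) for a deterministic grid MDP.

    Each move costs -1, the goal is terminal with value 0, and a move
    into a wall or obstacle leaves the agent where it is.
    """
    states = [(x, y) for x in range(width) for y in range(height)
              if (x, y) not in obstacles]
    values = {s: 0.0 for s in states}

    def move(state, delta):
        nxt = (state[0] + delta[0], state[1] + delta[1])
        return nxt if nxt in values else state

    def q_value(state, delta):
        return -1.0 + gamma * values[move(state, delta)]

    while True:
        change = 0.0
        for s in states:
            if s == goal:
                continue
            best = max(q_value(s, d) for d in ACTIONS.values())
            change = max(change, abs(best - values[s]))
            values[s] = best
        if change < tol:
            break

    # Greedy policy: pick the action with the highest one-step value.
    policy = {s: max(ACTIONS, key=lambda name: q_value(s, ACTIONS[name]))
              for s in states if s != goal}
    return values, policy


values, policy = value_iteration(width=3, height=3, goal=(2, 2))
```

In a flight setting, each grid cell would correspond to a region of the tracked volume at a fixed altitude, and the selected action would be converted into a target position or velocity for the onboard controller.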